Let’s say you’re strolling through an art museum, stopping every few feet to admire a work you’re particularly fond of. A Picasso on the left, a Matisse on the right.

But something stops you before you step away from the Picasso. It’s almost as if the figure is ... running off the frame, about to leap into your purse.

You think your eyes are deceiving you. You knew Picasso’s art was a little ... other-worldly ... but this? What is this sorcery? Am I at an art museum, or the fifth dimension?

The system could revolutionize museum exhibits and add another layer to virtual reality gaming. (Photo: UW Reality Lab)

Either way, you’ve just seen a single still image transform into an animated 3D character. How, you wonder?

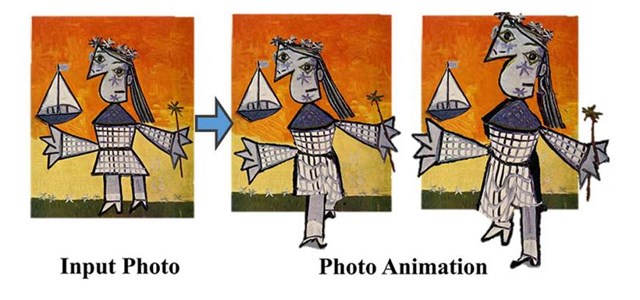

If you watched the video at the top of this article, you now know that such technology is possible, thanks to three researchers from Facebook and the University of Washington. They’re calling it “Photo Wake-Up.” The system essentially builds a body model from a still image and estimates a body map for that model. From there, the researchers construct a 3D mesh, apply textures to the mesh that match the body map, and integrate a skeletal rig that controls the figure’s motion.

A variety of still images, including cartoon characters, portraits and abstract paintings, can be animated using the Photo Wake-Up system.

Industry experts are calling the system “seriously impressive” and credit UW’s Reality Lab, in which both Google and Facebook have invested, as a hotbed of this type of innovation.

A co-creator of the technology, Israeli native Ira Kemelmacher-Shlizerman, is the triple threat behind Photo Wake-Up: She’s a Facebook research scientist, an assistant professor at UW, and the founder and co-director of the UW Reality Lab. Last year, she came up with a novel way to make cartoonish avatars look like they’re really playing musical instruments. And for several years, she’s been researching ways to produce more accurate age-progression photos.

Last summer, Kemelmacher-Shlizerman was part of a team of UW researchers who created a machine-learning algorithm that converts 2D YouTube clips of soccer matches into 3D reconstructions. Viewed through an augmented or virtual reality headset such as the Microsoft HoloLens or the HTC Vive Pro, the reconstruction places a virtual representation of the match on any real-world flat surface. Viewers can then walk around it or move in close to see key pieces of the action. Imagine, for example, a real-world soccer match playing out on your office desk.

And two years ago, she developed Dreambit, a search engine that lets users superimpose their faces on dozens of different hairstyles and looks.

“Our photos and videos tell a ton about ourselves, our histories, how people grow, age, learn to walk, and change over time,” Kemelmacher-Shlizerman, who graduated from the Weizmann Institute of Science in Israel, told GeekWire. “Exploring and learning from that data will enable magical applications in telepresence, health, sports, entertainment and many other unexpected ones. Enabling all this in 3D will create novel augmented and virtual reality content. This will be a breakthrough in how we communicate, and enable a much more connected world.”

Dreambit uses combined algorithms to show users how they’d look with different hairstyles. (Photo: Ira Kemelmacher-Shlizerman/University of Washington)
