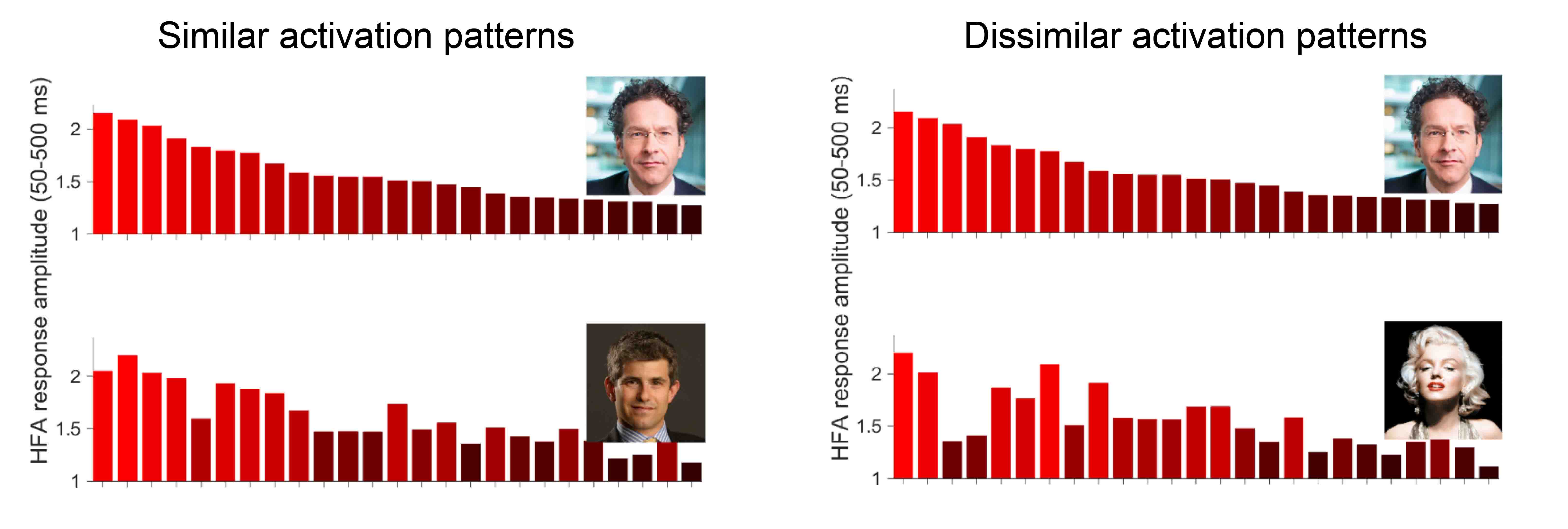

Pairs of face images that elicited similar (left column) and different (right column) neuronal activation patterns. Each bar shows the response of one electrode to the face in the photo; the higher the bar and the lighter the red, the stronger the response.

REHOVOT, ISRAEL—October 30, 2019—Our brains are so primed to recognize faces, or to tell people apart, that we rarely stop to think about it, yet what happens in the brain during such recognition is still far from understood. In a new study published in Nature Communications, researchers at the Weizmann Institute of Science have shed new light on this question. They found a striking similarity between the way faces are encoded in the human brain and the way they are encoded in high-performing artificial intelligence (AI) systems known as deep neural networks.

When we look at a face, groups of neurons in the visual cortex become active and fire signals. Certain groups of neurons respond selectively to faces but not to other objects. But how does the activity of individual neurons come together to produce face perception and recognition?

Prof. Rafael Malach of the Department of Neurobiology and Shany Grossman, a PhD student in his group, decided to address this question by comparing human brain activity with deep neural networks. These computing systems, which have recently revolutionized the field of AI, are trained to perform tasks by learning from enormous data sets. In the past few years, they have improved so dramatically that they now perform as well as, or even better than, humans on a variety of visual tasks, including face recognition.

Grossman and Guy Gaziv, a research student in the Department of Computer Science and Applied Mathematics, analyzed data obtained from 33 individuals in the lab of Dr. Ashesh Mehta of the Feinstein Institute for Medical Research at Northwell Health in New York. These unique subjects are epilepsy patients who had electrodes implanted in various regions of their brains for diagnostic purposes and who volunteered to participate in research tasks.

As the participants were shown a series of faces from different image databases, including famous and unfamiliar individuals, their brain activity was monitored via recordings from 96 electrodes implanted into the part of the brain responsible for face perception. The recordings showed that each face evoked a unique pattern of neuronal activation, involving different groups of neurons that fired at different intensities. Interestingly, some pairs of faces elicited similar-looking brain activity patterns – that is, they had similar activity “signatures” – whereas others elicited activation patterns that differed greatly from one another. The scientists were curious to know whether these activation signatures play an important role in our ability to recognize faces.

They decided to compare the human face recognition system with a deep neural network of similar face recognition ability. This network, loosely inspired by the human visual system, contained artificial elements analogous to neurons, arranged in some two dozen “layers.” To recognize a person’s face, the artificial neurons in successive layers selected and combined increasingly complex facial features: from the simplest ones, such as lines and primitive shapes, to more complex ones, such as parts of the eye and other facial fragments, and finally to features that definitively identify a person.

The researchers reasoned that if the face-coding patterns they found in the human brain were critical for allowing humans to recognize faces, similar signatures should also appear in the artificial network. To test this, they presented the network with the same face images shown to the human volunteers. They then checked whether these faces elicited distinct, face-specific activation patterns whose diversity and structure matched those detected in the human brain.

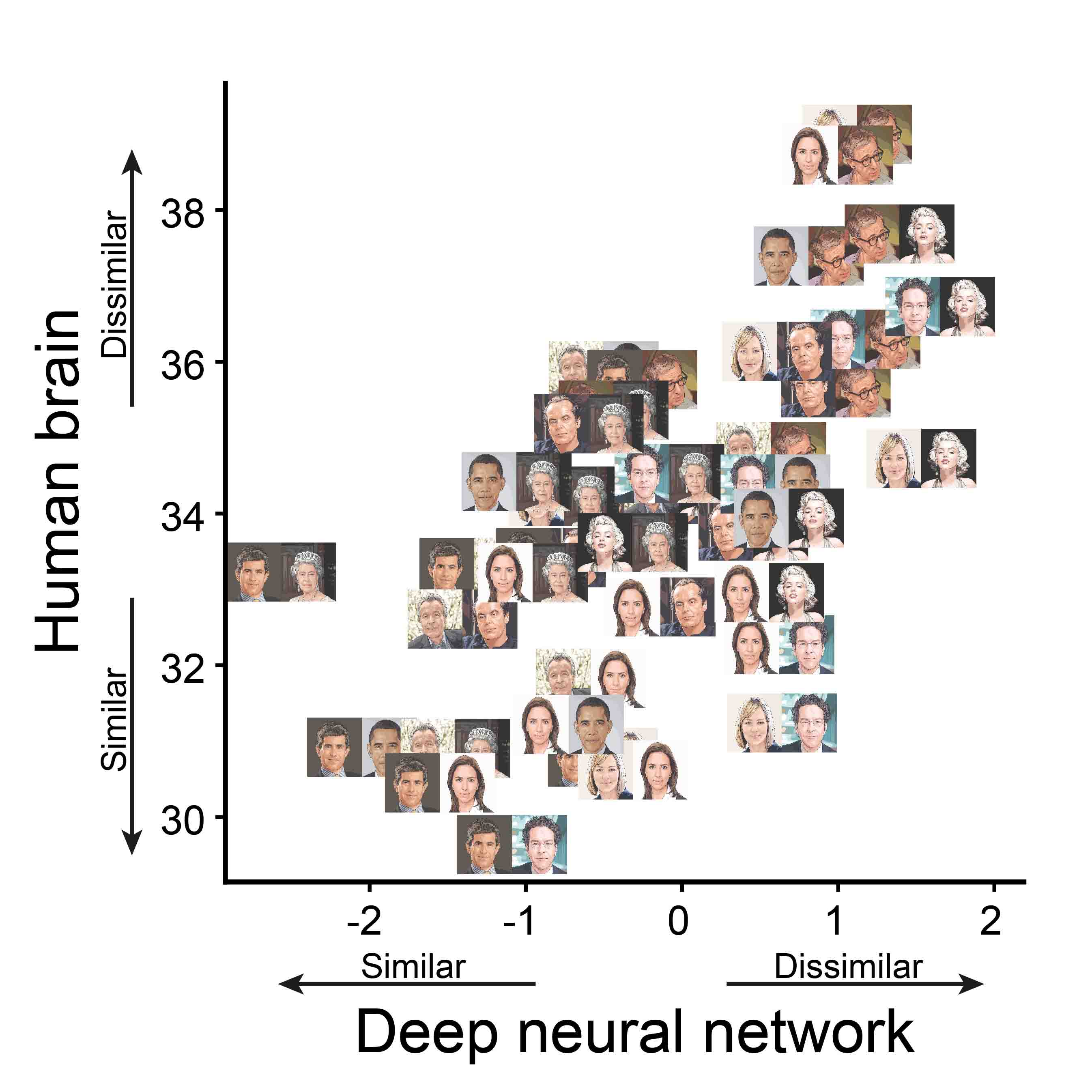

Pairs of faces plotted according to how dissimilar their activation patterns are. The vertical axis shows the human brain data; the horizontal axis shows the data obtained with the deep neural network. Most pairs cluster close to the diagonal, showing a strong parallel between the way the brain and the network encode faces. For example, Woody Allen and Marilyn Monroe have very dissimilar representations in both the brain and the network, so this pair is placed high on both axes.

Intriguingly, the scientists found a striking parallel between the human and artificial systems. It was most prominent in the middle layers of the deep network, those that represent the actual pictorial appearance of the faces rather than the more abstract personal identity of the people pictured.

“It’s highly informative that two such drastically different systems, a biological one and an artificial one (that is, the brain and a deep neural network), have evolved in such a way that they possess similar characteristics,” says Prof. Malach. “I would call this convergent evolution, just as the wings of man-made airplanes show similarity to those of insects, birds, and even mammals. Such convergence points to the crucial importance of unique face-coding patterns in face recognition.”

“Our findings support the hypothesis that distinct activation patterns of neurons in response to different faces, as well as the relationship between these patterns, play a key role in the way the brain perceives faces,” says Grossman. “These findings can help advance our understanding of how face perception and recognition are encoded in the human brain. At the same time, they may also help to further improve the performance of neural networks by tweaking them so as to bring them closer to the observed brain-response patterns.”

Study authors also included Michal Harel of the Department of Neurobiology, Prof. Michal Irani of the Department of Computer Science and Applied Mathematics, and Dr. Mehta’s group at the Feinstein Institute for Medical Research.

Prof. Rafael Malach’s research is supported by the Barbara and Morris L. Levinson Professorial Chair in Brain Research; the Dr. Lou Siminovitch Laboratory for Research in Neurobiology; and the estate of Florence and Charles Cuevas.